Financial services major Citi remains gung-ho on Nvidia (NVDA) despite muted sentiment around tech stocks right now. If Citi's forecast for the chip leader's Q4 results is borne out, it could dispel concerns about broader weakness in the tech sector and reinvigorate Nvidia's share price, which has been under pressure so far this year.

Citi analyst Atif Malik, who rates the stock a “Buy” with a $270 price target, wrote in a client note: "We model Jan-Q sales of $67B above Street $65.6B and [we] expect Apr-Q guide of $73B vs. Street $71.6B. We expect continued strong ramp of B300 with Rubin launch to drive a 34% H/H acceleration in CY2H26 sales vs. 27% in CY1H26. We believe most investors are looking past the earnings to [the] annual GTC conference in mid-March for NVIDIA to talk about [its] inference roadmap using Groq’s low latency SRAM IP and provide an early outlook for 2026/27 AI sales."

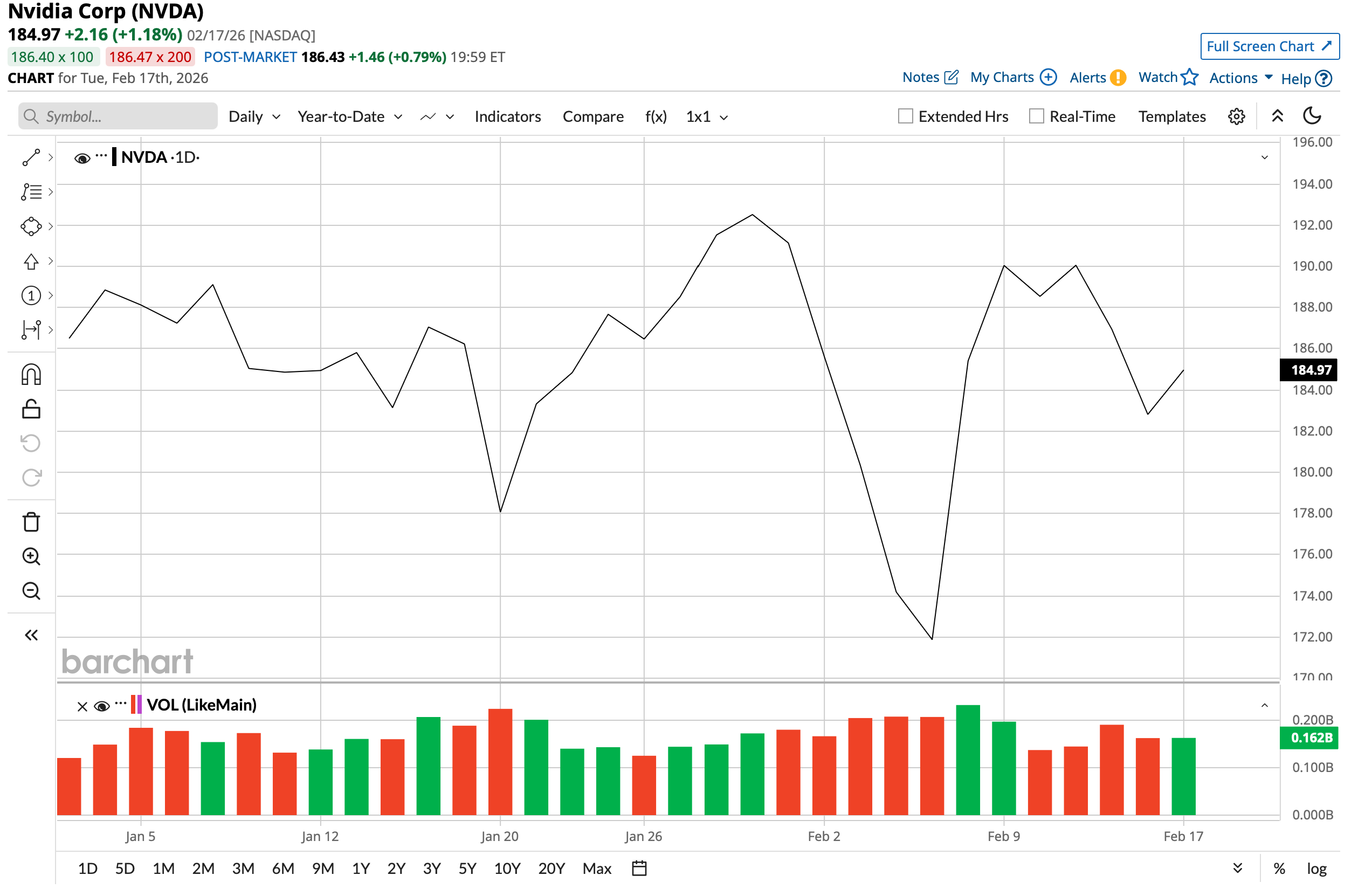

Malik's upbeat note comes as Nvidia's stock has failed to stand out against the broader market in 2026, a rarity for the company. Shares of the world's most valuable company by market cap ($4.4 trillion) are up a measly 0.87% year-to-date, roughly in line with the S&P 500 ($SPX).

Routine Beat in Q3 & Expectations for Q4

Nvidia once again posted a strong third quarter of fiscal 2026, comfortably beating both revenue and earnings estimates while keeping year-over-year (YoY) growth well above 50% across its key metrics.

The company recorded $57 billion in sales, which was 62% higher than the same quarter last year. Earnings per share climbed 60% to $1.30, clearing the $1.26 consensus estimate. The data center segment, still the primary engine of growth, grew by 66% to $51.2 billion.

Cash flow performance remained outstanding. Operating cash flow increased to $23.8 billion from $17.6 billion in the prior-year quarter, and free cash flow rose 64% to $22.1 billion. Nvidia ended the period with $60.6 billion in cash, short-term debt below $1 billion, and long-term debt of $7.5 billion, leaving cash more than eight times the long-term debt balance.

Notably, while the company's earnings have beaten Street expectations for nine straight quarters, its longer-term track record is even more impressive: over the past 10 years, Nvidia's revenue and earnings have grown at compound annual growth rates (CAGRs) of 44.06% and 66.66%, respectively.

For Q4, Nvidia has guided for revenue between $63.7 billion and $66.3 billion, while the Street expects $65.63 billion. Analysts also expect EPS of $1.46 and a gross margin of about 75% for the quarter.

What Is Coming Next?

Nvidia has defined the AI revolution for almost five years now. Its chips are the de facto industry benchmark and power a large share of the world's AI workloads. Its dominant market position has not bred complacency, however, as the company readies a broad arsenal of hardware and software to remain the preferred partner for companies looking to build out their AI capabilities.

To that end, its next-generation Vera Rubin platform will be the central attraction. At the core of the platform, the Rubin R100 GPU represents a generational shift toward "Agentic AI" and large-scale Mixture-of-Experts (MoE) models. Built on TSMC’s (TSM) advanced 3nm (N3) process, each Rubin GPU packs 336 billion transistors, roughly 60% more than the 208 billion in the Blackwell B200.

Compared with Blackwell, Rubin also delivers a major leap in memory performance as Nvidia's first GPU to adopt HBM4 memory. The transition lifts memory bandwidth from Blackwell's 8 TB/s to 22 TB/s, a nearly 3x improvement that should ease the memory bottlenecks of serving models with more than a trillion parameters. Interconnect speed has also doubled with NVLink 6, which offers 3.6 TB/s of bidirectional bandwidth. Against rivals such as AMD's (AMD) upcoming Instinct MI455X, which is projected to offer roughly 19.6 TB/s of memory bandwidth, Nvidia has aggressively optimized Rubin to keep its lead in data throughput, reinforcing its "CUDA moat" with hardware designed to ease the bottlenecks typical of high-speed AI reasoning.

Nvidia's full-stack ambitions also extend beyond GPUs to networking, including the ConnectX-9. The ConnectX-9 is the latest iteration of Nvidia's specialized network interface card (NIC), designed to enable ultra-low-latency communication between GPUs across a cluster. It operates at 800 Gb/s per port and connects servers over InfiniBand or Ethernet, complementing the NVLink 6 fabric inside each rack, so that thousands of GPUs can work together almost as one giant processor. The ConnectX-9 also features hardware-based "Adaptive Routing" and congestion control, which help prevent the data bottlenecks that often plague large AI training runs. Compared with standard Ethernet alternatives, it offers up to 1.6x higher networking efficiency.

On the software side, Nvidia offers far more than CUDA. This is where Nvidia Inference Microservices, or NIM, comes in. NIM is a software suite that provides prepackaged "containers" for self-hosting AI models such as Llama 3 or Nemotron. Instead of developers spending weeks optimizing a model for specific hardware, NIM tunes the model to run at peak performance on whatever Nvidia GPU is available, from a desktop RTX card to an H100 or Rubin. Its edge over standard open-source deployment tools comes from pre-integrated libraries such as TensorRT-LLM, which can double a model's inference speed out of the box. Compared with cloud-based API services, NIM lets enterprises keep their data on-premises, improving security while reducing latency. The cost benefit is substantial: by maximizing GPU utilization, NIM can lower the "cost per token" of AI responses by up to 5x, making it financially viable for companies to deploy AI agents at scale.

Analyst Opinion on NVDA Stock

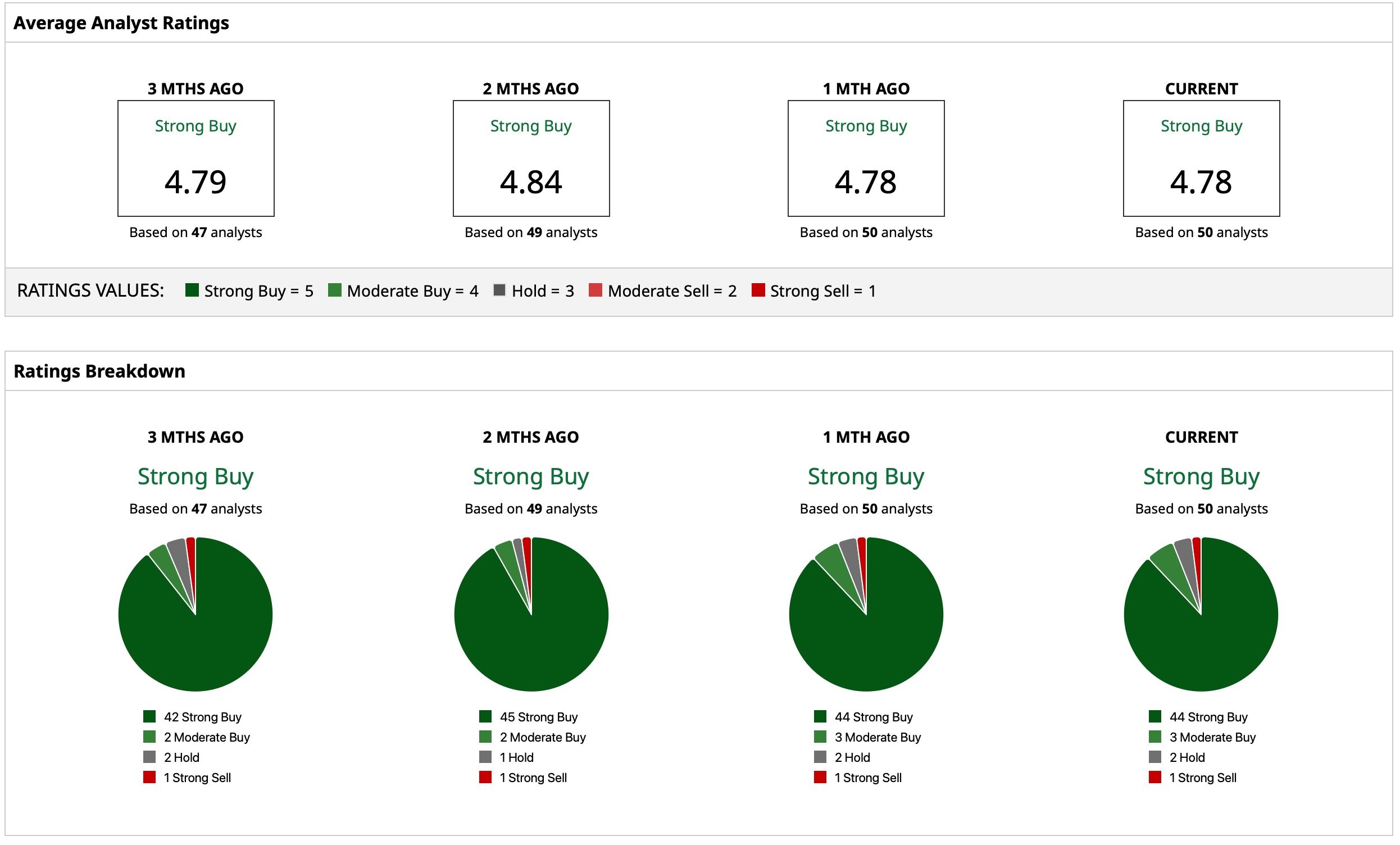

Overall, analysts rate NVDA stock a consensus “Strong Buy,” with a mean price target of $255.55, implying upside potential of about 38.2% from current levels. Of the 50 analysts covering the stock, 44 rate it a “Strong Buy,” three a “Moderate Buy,” two a “Hold,” and one a “Strong Sell.”

On the date of publication, Pathikrit Bose did not have (either directly or indirectly) positions in any of the securities mentioned in this article. All information and data in this article is solely for informational purposes. For more information please view the Barchart Disclosure Policy here.
